May 2025

Authors

Shwet Sharvary

Lead Design Consultant, Fractal BxD

Arkaprabha Dey

Senior Design Consultant, Fractal BxD

Contributors

Raj Aradhyula

Chief Design Officer, Fractal BxD

Ramchand Matta

Principal Design Consultant, Fractal BxD

You’ve likely heard the promises: AI will revolutionize your business, drive efficiency, and deliver unparalleled insights. But here’s the reality — without the right foundation, AI can create more problems than it solves. Projects stall, tools go unused, and employees struggle to trust systems that don’t seem built for them.

The key to unlocking AI’s true potential lies in designing for humans, not just systems. This begins with a Decision-Backward Approach, which centers on understanding how people make decisions in their roles and building AI tools that align with those needs. By starting with the user’s context — what actions they need to take and the challenges they face — we design solutions that are transparent, safe, and easy to use, empowering teams to achieve their goals seamlessly.

“Knowing how best to apply design principles for AI products requires an understanding of the current challenges and gaps organizations face when creating and implementing tools.”

The core challenges AI products face

Making AI work means addressing the challenges that prevent adoption and success. Organizations often struggle with deeply interconnected issues, creating roadblocks that can undermine even the most advanced initiatives.

Low user adoption

Even the most innovative AI tools fail when they’re too complex or don’t align with users’ workflows. For example, an AI-powered customer support assistant might offer predictive text or sentiment analysis, but if it doesn’t integrate seamlessly with the user’s interface or fails to consider team-specific needs, employees will abandon it in favor of familiar methods.

Lack of context awareness

AI tools that fail to adapt to situational complexities risk frustrating users. For instance, consider an AI sales assistant designed to streamline negotiations. If it doesn’t surface real-time client data — like past purchase history, ongoing discussions, or current promotions — sales reps lose trust in the tool’s usefulness.

Ethical and bias concerns

Bias in AI outputs can undermine trust. Take an AI-powered hiring tool, for example. If the system disproportionately favors certain demographic groups, even unintentionally, it not only damages the company’s reputation but also discourages users from relying on it for critical hiring decisions.

Scalability challenges

Scaling AI systems often exposes reliability gaps. For example, a predictive analytics tool that performs flawlessly in small-scale tests might falter under enterprise-wide deployment. Seasonal trends, regional data variations, unique market demands, and other contextual factors can overwhelm systems that weren’t designed to grow with the organization.

Overpromising, under-delivering

AI is often marketed as a “magic bullet.” For instance, a financial dashboard app might promise personalized insights but struggle to analyze complex transaction patterns, leading users to view its suggestions as superficial or redundant.

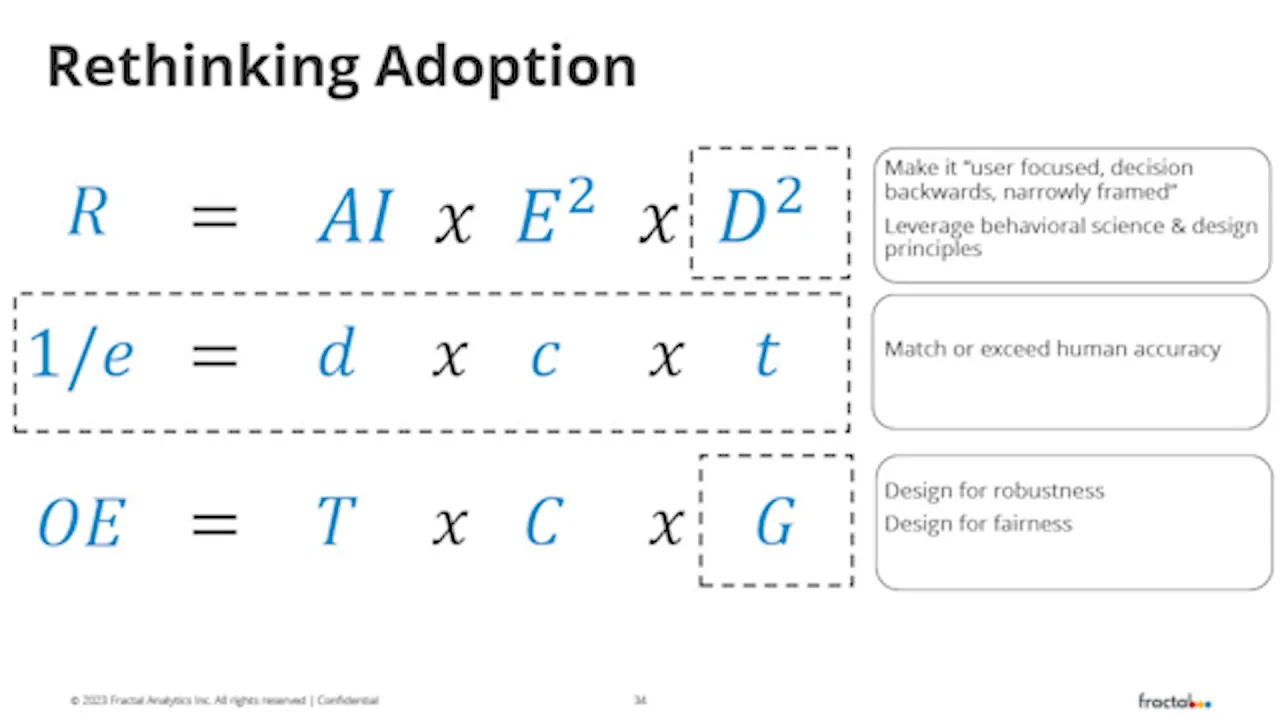

The decision-backward approach

Addressing these gaps requires a shift in how we design AI. Our Decision-Backward Approach ensures that AI tools are created with people, not just processes, in mind.

This approach starts by asking key questions:

What decisions do users need to make?

What actions do they need to take?

What barriers prevent them from achieving their goals?

By answering these questions, we design solutions that integrate seamlessly into workflows, anticipate needs, and empower users to make informed decisions. This user-first approach is the foundation for AI that works for humans.

A Framework: AI for Humans

Our framework builds on the Decision-Backward Approach and our understanding of human behavior to address product challenges with six human-centric design principles:

Create context-aware systems: Build systems with comprehensive awareness of context, drawing on situational and historical insights to form a complete picture of each user.

Evolve with users’ maturity: Adapt complexity and guidance to match each user’s experience level and growing expertise, and iterate based on user feedback.

Elevate human abilities: Empower users to do more than ever by amplifying their creativity and capability with innovative tools and insights, thereby improving their effectiveness.

Keep humans in charge: Help users recover from errors by enabling corrections and preserving user control over system decisions, including the ability to override AI actions when necessary, to ensure accuracy and build user confidence.

Explain AI decisions: Make the AI’s decision-making process clear to users so they can understand, trust, and effectively interact with the system, while upholding ethical standards and fairness.

Seamlessly blend AI into workflows: Design interfaces that facilitate natural, intuitive, and accessible interactions with AI, taking into account the user’s familiarity with AI behavior, and integrate GenAI features seamlessly into existing interfaces.

By focusing on these principles, the framework ensures that AI solutions are not only technically advanced but also intuitive, empowering, and aligned with users’ real-world needs.

Redefining AI design

AI’s potential lies in its ability to empower people. But achieving this requires more than just technical sophistication — it demands tools designed with humans at their core.
