Feb 2026

Authors

Nimish Devasthali

Principal Architect

Ankit Sajwan

Senior AI Engineer

Driving real-time insurance decisioning with generative AI and Azure ML–based RAG

Insurance enterprises manage vast volumes of policy documents, endorsements, and claims records. Retrieving accurate and up-to-date policy information from these repositories is often slow and error-prone, impacting customer support, claims processing, and underwriting decisions.

Retrieval-Augmented Generation (RAG) addresses this challenge by combining semantic search with large language models (LLMs). Built using Azure Machine Learning, our RAG solution enables insurance teams to access precise, context-aware answers in real time, without manual document searches.

Azure ML RAG architecture for insurance document intelligence

The Azure ML–based RAG architecture integrates multiple Azure AI services to deliver a scalable, secure, and production-ready solution for the insurance domain.

Insurance documents, including unstructured PDFs and policy files, are ingested from Azure Blob Storage and processed with Azure Form Recognizer (since renamed Azure AI Document Intelligence) to extract their text. The extracted text is split into chunks and converted into vector embeddings using Azure OpenAI embedding models.

These embeddings are stored in Azure AI Search, enabling fast and relevant semantic retrieval across large insurance document repositories.
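The chunking step can be illustrated with a minimal sketch. The article does not specify the chunking strategy, so the word-based windows, the `chunk_size` and `overlap` values, and the function name `chunk_text` are all assumptions made for illustration. In a real pipeline each chunk would then be sent to an embedding model.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into word-based chunks that share `overlap` words.

    Hypothetical helper: the sizes are illustrative defaults, not values
    taken from the article.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks
```

Overlapping windows are one common way to reduce the chance that a clause, such as an exclusion, is cut in half at a chunk boundary.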

Intelligent policy retrieval using prompt flow and GPT-4o

When an insurance agent submits a query about policy coverage, exclusions, or claim eligibility, Azure ML Prompt Flow orchestrates a hybrid search, combining keyword and vector matching, in Azure AI Search. The system retrieves the most relevant policy sections and augments the user query with that context.
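Hybrid search has to merge a keyword ranking and a vector ranking into one list. Azure AI Search documents Reciprocal Rank Fusion (RRF) for this purpose; the article does not describe the merge step, so the sketch below is only a local illustration of the RRF idea, not the service's implementation. The function name and the constant `k = 60` (a commonly used default) are assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of document ids into one ranking.

    Each document scores 1 / (k + rank) for every list it appears in,
    where rank starts at 1. Illustrative only.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by id for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


keyword_hits = ["a", "b", "c"]   # hypothetical keyword ranking
vector_hits = ["b", "c", "d"]    # hypothetical vector ranking
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
```

Documents that appear in both rankings accumulate two contributions, so they tend to rise above documents found by only one method.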

This enriched prompt is passed to GPT-4o, which generates accurate, grounded, and explainable responses. The result is an LLM-powered insurance chatbot that delivers consistent, policy-aligned answers in seconds.
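A minimal sketch of how retrieved policy sections might be folded into the prompt, assuming each retrieved chunk is a dictionary with hypothetical `source` and `text` fields. The system instruction wording is illustrative, and the role/content message list follows the common chat-completion message shape rather than anything stated in the article.

```python
def build_grounded_prompt(question: str, chunks: list[dict]) -> list[dict]:
    """Combine a user question with numbered policy excerpts.

    `chunks` items are assumed to look like
    {"source": "policy.pdf#p3", "text": "..."} (hypothetical schema).
    """
    context = "\n\n".join(
        f"[{i}] (source: {chunk['source']})\n{chunk['text']}"
        for i, chunk in enumerate(chunks, start=1)
    )
    system = (
        "Answer using only the policy excerpts provided. "
        "Cite excerpt numbers, and say so if the excerpts do not contain the answer."
    )
    user = f"Policy excerpts:\n{context}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]
```

Numbering the excerpts and carrying their source gives the model something concrete to cite, which is one way to make an answer traceable back to the policy text.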

Business impact of RAG for insurance operations

By combining automated retrieval with generative AI, the Azure ML RAG pipeline improves operational efficiency: agents no longer need to search documents manually, response accuracy improves, and average query resolution time drops.

This enables insurers to handle complex, regulation-heavy inquiries with confidence, accelerating claims processing, reducing risk exposure, and improving customer satisfaction in a highly regulated environment.

Azure services used in the RAG pipeline

Azure Blob Storage: Centralized storage for insurance policies and claims documents

Azure Form Recognizer: Extracts structured text from PDFs and scanned policy documents

Azure OpenAI Models: Generate embeddings with embedding models and responses with GPT-4o

Azure AI Search: Stores and retrieves vector embeddings for semantic policy search

Azure ML Studio: Orchestrates indexing and RAG workflows using Prompt Flow and SDKs

Azure ML Monitor: Monitors performance, response quality, and model drift

Key components of the Azure ML RAG pipeline

Indexing pipeline for insurance documents

The indexing pipeline prepares insurance documents for retrieval by chunking text, generating embeddings, and storing vectors in Azure AI Search. This creates a high-quality semantic knowledge base for downstream queries.

RAG chatbot pipeline for insurance queries

In the RAG chatbot flow, user queries are converted into embeddings and matched against indexed policy vectors. The most relevant policy chunks are retrieved, combined with the query, and sent to the LLM to generate the final response.
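The matching step can be pictured as a similarity search between the query embedding and the stored chunk embeddings. The sketch below is a brute-force, in-memory illustration using cosine similarity; in the solution described, Azure AI Search performs the retrieval, so the `index` dictionary, the tiny two-dimensional vectors, and the function names are assumptions for illustration only.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def top_k_chunks(
    query_vec: list[float], index: dict[str, list[float]], k: int = 3
) -> list[tuple[str, float]]:
    """Return the k chunk ids most similar to the query, best first."""
    scored = [(cosine_similarity(query_vec, vec), chunk_id)
              for chunk_id, vec in index.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [(chunk_id, score) for score, chunk_id in scored[:k]]


# Hypothetical toy index: chunk id -> embedding.
index = {
    "coverage": [1.0, 0.0],
    "exclusions": [0.0, 1.0],
    "claims": [1.0, 1.0],
}
results = top_k_chunks([1.0, 0.1], index, k=2)
```

Real embeddings have hundreds or thousands of dimensions and a vector index avoids scoring every chunk, but the ranking principle is the same.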


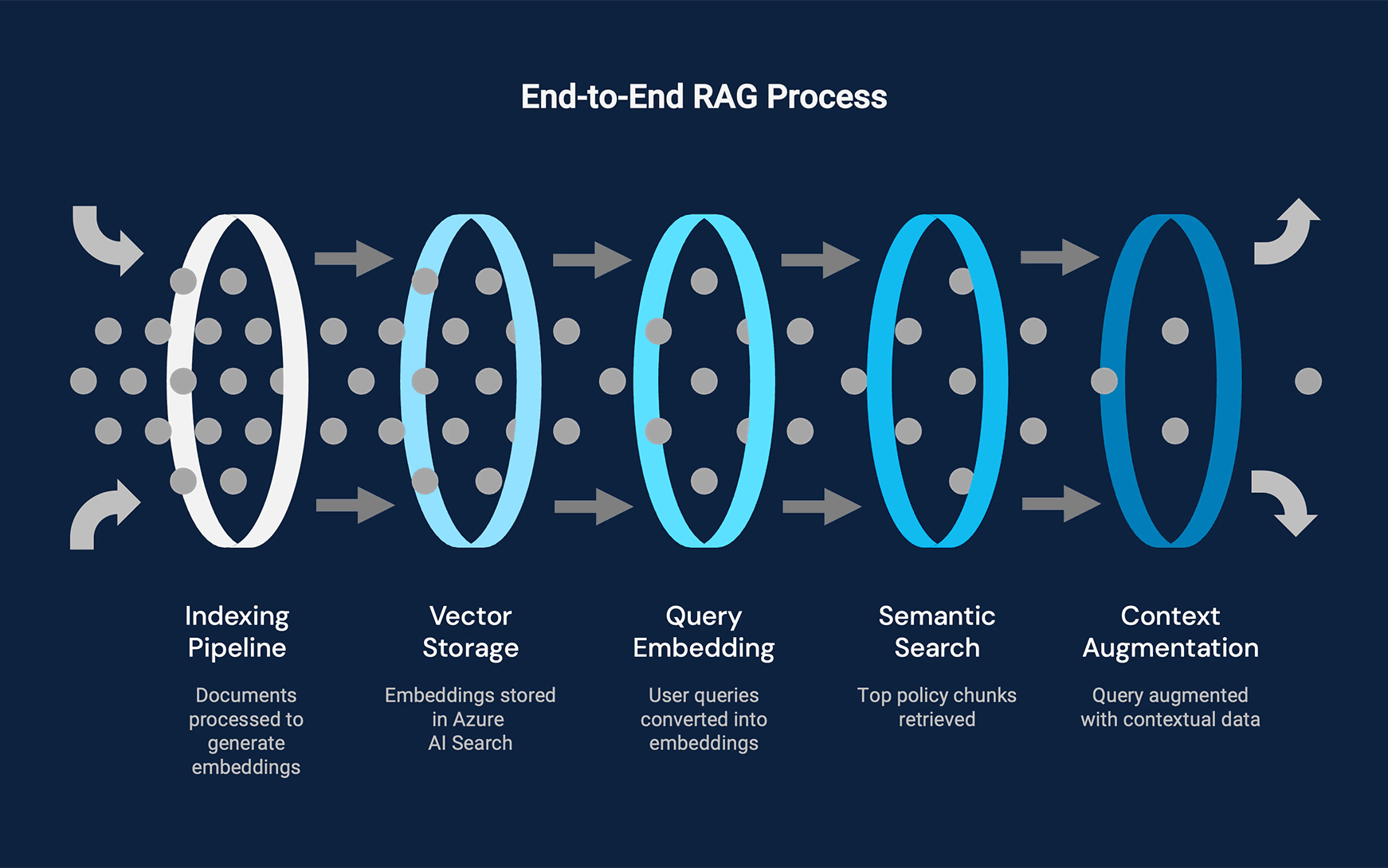

End-to-end RAG process flow in Azure ML

Upload insurance documents to Azure Blob Storage

Run the indexing pipeline to generate embeddings

Store vectors in Azure AI Search

Convert user queries into embeddings

Retrieve top policy chunks using semantic search

Augment the query with contextual data

Generate responses using GPT-4o

Return results via an integrated web application
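The query-time half of the steps above (embed the query, retrieve chunks, augment, generate) can be sketched as one function that receives each stage as a callable. This is a structural illustration under assumptions: `answer_query` and its parameters are hypothetical names, and in the described solution the callables would wrap an Azure OpenAI embedding model, Azure AI Search, and GPT-4o respectively.

```python
from typing import Callable, Sequence


def answer_query(
    query: str,
    embed: Callable[[str], Sequence[float]],
    search: Callable[[Sequence[float], int], list[str]],
    generate: Callable[[str], str],
    top_k: int = 3,
) -> str:
    """Run the query-time RAG stages in order (illustrative wiring only)."""
    query_vector = embed(query)            # convert the query into an embedding
    chunks = search(query_vector, top_k)   # retrieve the top policy chunks
    context = "\n".join(chunks)
    prompt = f"Context:\n{context}\n\nQuestion: {query}"  # augment the query
    return generate(prompt)                # generate the response
```

Passing the stages in as callables keeps the flow testable with simple stand-ins before any cloud service is connected.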

Benefits of implementing RAG in Azure ML

Full Machine Learning Lifecycle Management: Experimentation, deployment, monitoring, and governance

Python-Centric Customization: Flexible development using Azure ML SDKs and Prompt Flow

Advanced Evaluation and Experimentation: Continuous optimization of retrieval accuracy and LLM responses

Enterprise Cost Control and Governance: Secure, compliant, and scalable AI operations
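As one example of what evaluating retrieval accuracy could look like, the sketch below computes a hit rate at k over a small labeled set: the share of test queries for which at least one known-relevant chunk appears in the top k results. The metric choice, the function name, and the data shapes are assumptions; the article does not say which evaluation metrics are used.

```python
def hit_rate_at_k(
    retrieved: list[list[str]], relevant: list[set[str]], k: int = 3
) -> float:
    """Fraction of queries with at least one relevant chunk in the top k.

    retrieved[i] is the ranked list of chunk ids returned for query i;
    relevant[i] is the set of chunk ids a reviewer marked as correct.
    """
    if len(retrieved) != len(relevant):
        raise ValueError("retrieved and relevant must have the same length")
    if not retrieved:
        return 0.0
    hits = sum(
        1 for ids, gold in zip(retrieved, relevant) if gold.intersection(ids[:k])
    )
    return hits / len(retrieved)
```

Tracking a number like this across changes to chunk size or search settings makes it easier to tell whether a change actually helped retrieval.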

Conclusion: Enabling scalable generative AI for insurance

Retrieval-Augmented Generation in Azure ML transforms how insurers access and apply enterprise knowledge. By unifying semantic search with generative AI, insurers can deliver faster, more accurate, and context-rich responses at scale. This approach modernizes insurance workflows and lays the foundation for resilient, AI-driven decision-making in a regulated industry.
