Operationalizing and scaling machine learning to drive business value can be challenging. While many businesses have started investing in it, only 13% of data science projects actually make it to production. Moving from academic ML to real-world deployment is difficult because the journey requires fine-tuning ML models to fit the practical needs of a business and ensuring the solution can be implemented at scale.

Many organizations struggle with ML operationalization due to a lack of data science and machine learning capabilities, difficulty harnessing best practices, and insufficient collaboration between data science and IT operations.

Common challenges with ML operationalization

Many organizations are drawn to buzzwords like “machine learning” and “AI” and spend their development budgets pursuing technologies rather than addressing a real problem. ML projects are an investment, and obstacles in operationalizing them make it even harder for the business to realize value.

Here are some common ML operationalization bottlenecks and the solutions to tackle them.

- Lack of communication, collaboration, and coordination: Proper collaboration between the data science team and other teams, such as business, engineering, and operations, is crucial. Without proper alignment and feedback, the ML project may not add real-world business value.

- Lack of a framework or architecture: ML models fail when they lack a proper framework and architecture to support model building, deployment, and monitoring.

- Insufficient infrastructure: ML models need large volumes of data for training. Without the proper infrastructure, most of the time is spent preparing data and dealing with quality issues. Data security and governance are also crucial and must be considered in the initial phase.

- The trade-off between prediction accuracy and model interpretability: Complex models generally provide more accurate predictions but are harder to interpret. The business must decide what trade-off is acceptable to get a “right-sized” solution.

- Compliance, governance, and security: The data science team may not always consider issues such as legal requirements, compliance, and IT operations that arise after ML models are deployed. In production, it is important to set up performance indicators and monitor whether the model is running smoothly. Understanding how ML models behave on production data is therefore a crucial part of risk mitigation.
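To make the point about production performance indicators concrete, here is a minimal sketch of a rolling-window accuracy check. The class name, window size, and accuracy threshold are all hypothetical choices for illustration; a real project would agree on the indicator and its threshold with the business, and would likely rely on a dedicated monitoring service rather than hand-rolled code.

```python
from collections import deque
from typing import Optional


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent predictions and flags KPI breaches."""

    def __init__(self, window_size: int, min_accuracy: float):
        # Both values are illustrative assumptions, not recommended defaults.
        self.min_accuracy = min_accuracy
        self._outcomes = deque(maxlen=window_size)  # 1 = correct, 0 = incorrect

    def record(self, predicted, actual) -> None:
        """Store whether a production prediction matched the observed outcome."""
        self._outcomes.append(1 if predicted == actual else 0)

    def accuracy(self) -> Optional[float]:
        """Return accuracy over the current window, or None if nothing is recorded."""
        if not self._outcomes:
            return None
        return sum(self._outcomes) / len(self._outcomes)

    def breached(self) -> bool:
        """Alert only once the window is full, to avoid noisy early alarms."""
        window_full = len(self._outcomes) == self._outcomes.maxlen
        return window_full and self.accuracy() < self.min_accuracy


monitor = RollingAccuracyMonitor(window_size=4, min_accuracy=0.75)
for predicted, actual in [(1, 1), (0, 0), (1, 1), (1, 0), (0, 1)]:
    monitor.record(predicted, actual)
    if monitor.breached():
        print(f"Accuracy fell to {monitor.accuracy():.2f}; review the model")
```

Waiting for a full window before alerting is one possible design choice; it trades slower detection for fewer false alarms right after deployment.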

Unfortunately, many ML projects fail at various stages without ever reaching production. However, with the correct approach and a mix of technical and business expertise, such as that provided by Fractal’s data science team, it is possible to avoid or quickly resolve many of these common pitfalls. Fractal can help organizations deploy more ML models to production and achieve a faster time to value for ML projects with the tools, practices, and processes of MLOps.

Starting with the business objective

Fractal’s proven MLOps methodology helps streamline and standardize each stage of the ML lifecycle from model development to operationalization. It allows collaboration between technical and non-technical users and empowers everyone to participate actively in the development process.

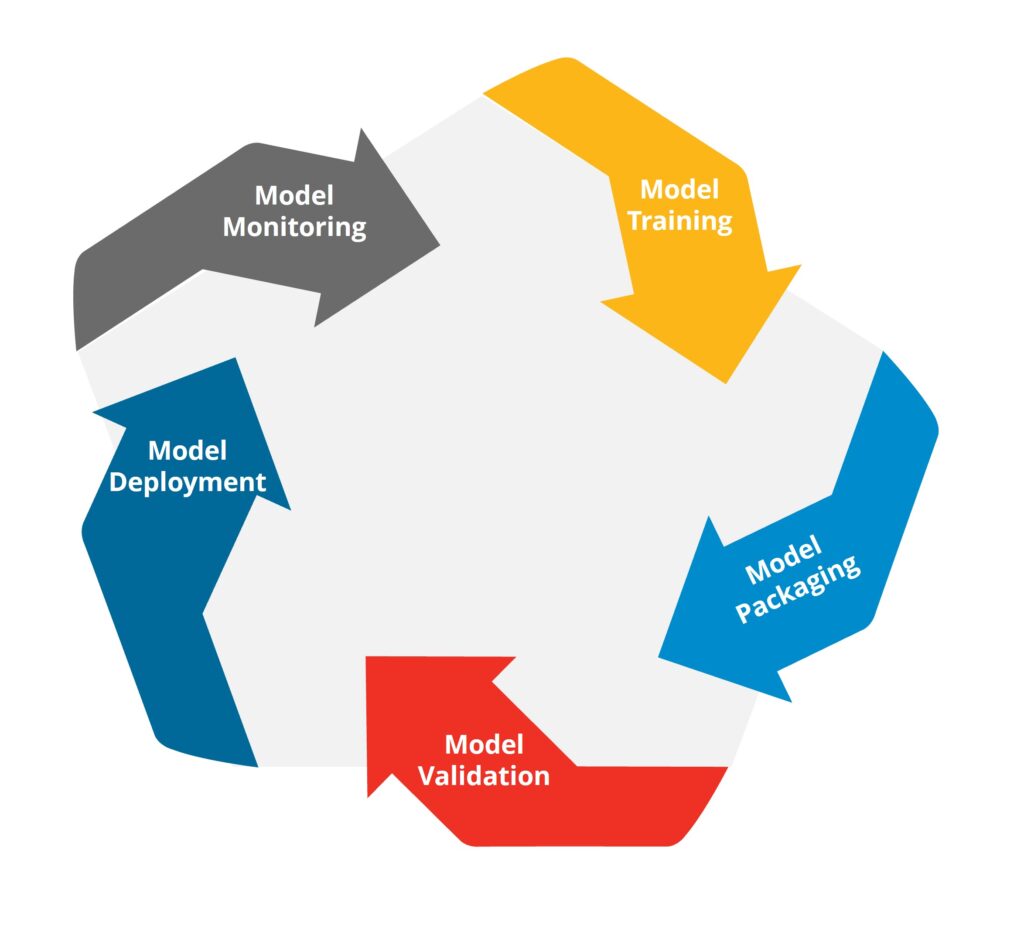

We have helped many organizations leverage MLOps to overcome their challenges. Our approach includes a process for streamlining model training, packaging, validation, deployment, and monitoring to help ensure ML projects run consistently from end to end.

Our successful 5-stage model

- Train: We create and train an initial model based on available data, business requirements, and desired outcomes.

- Package: Once the model is trained, we package up the model to make it easy to test, iterate, and deploy at scale.

- Validate: Next, we help validate models by measuring candidate models against predefined KPIs, testing deployments, and testing application integrations.

- Deploy: After validation, we deploy models by identifying the deployment target, planning the deployment, and then deploying the models to their production environment. We ensure that the services are implemented to support scalability, data pipelines are automated, and a model selection strategy is in place.

- Monitor: Finally, we monitor models to track behavior, continuously validate KPIs, measure accuracy and response times, watch for drift in model performance, and more.
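The five stages above can be sketched in a few lines of Python. This is an illustrative toy under stated assumptions, not Fractal's actual tooling: the model is a one-feature threshold classifier, the KPI value is invented, a plain dictionary stands in for the production environment, and drift is approximated by a shift in the mean of the live inputs. All function names are hypothetical.

```python
import pickle
import statistics

KPI_MIN_ACCURACY = 0.8  # hypothetical KPI agreed before validation


def train(rows):
    """Train: learn a threshold halfway between the two class means."""
    positives = [x for x, label in rows if label == 1]
    negatives = [x for x, label in rows if label == 0]
    threshold = (statistics.mean(positives) + statistics.mean(negatives)) / 2
    train_mean = statistics.mean([x for x, _ in rows])
    return {"threshold": threshold, "train_mean": train_mean}


def predict(model, x):
    return 1 if x >= model["threshold"] else 0


def package(model) -> bytes:
    """Package: serialize once so the same artifact is validated and deployed."""
    return pickle.dumps(model)


def validate(artifact: bytes, holdout) -> bool:
    """Validate: score the packaged artifact against the predefined KPI."""
    model = pickle.loads(artifact)
    correct = sum(predict(model, x) == label for x, label in holdout)
    return correct / len(holdout) >= KPI_MIN_ACCURACY


def deploy(artifact: bytes, registry: dict, name: str) -> None:
    """Deploy: the registry dict is a stand-in for a production environment."""
    registry[name] = pickle.loads(artifact)


def monitor(model, live_inputs, tolerance: float) -> bool:
    """Monitor: report drift when live inputs move away from the training mean."""
    return abs(statistics.mean(live_inputs) - model["train_mean"]) > tolerance


training = [(1.0, 0), (2.0, 0), (8.0, 1), (9.0, 1)]
holdout = [(1.5, 0), (2.5, 0), (7.5, 1), (8.5, 1)]
production = {}

artifact = package(train(training))
if validate(artifact, holdout):  # only validated artifacts reach production
    deploy(artifact, production, "demo-model")

drifted = monitor(production["demo-model"], [9.0, 10.0, 11.0], tolerance=1.0)
print("Drift detected:", drifted)
```

The point of the sketch is the gate between stages: the artifact that is validated is byte-for-byte the one that is deployed, and monitoring compares live behavior against a baseline captured at training time.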

Google Cloud services for ML model deployment

We can help teams successfully deploy and integrate more ML models into production and achieve a faster time to value for ML projects with more efficient model training using Google Cloud services such as:

- Google Cloud Storage: It lets organizations store, access, and maintain data without owning and operating their own data centers, moving expenses from a capital expenditure model to an operational expenditure model.

- Cloud Functions: It provides a simple way to run code in response to events with minimal configuration and maintenance. Cloud Functions are event-driven, meaning they can be triggered by changes in data, new messages, and user interactions.

- BigQuery: A fully managed enterprise data warehouse that helps you manage and analyze your data with built-in features like machine learning, geospatial analysis, and business intelligence.

- Google Kubernetes Engine (GKE): A managed Kubernetes service that helps organizations move toward zero ops. Kubernetes is an open-source container orchestration system for automating software deployment, scaling, and management.

- Dataproc: It is a fully managed and highly scalable service for running Apache Hadoop, Apache Spark, and 30+ open-source tools and frameworks.

- Vertex AI: It is a machine learning platform that lets you train and deploy ML models and AI applications and customize large language models (LLMs) for use in your AI-powered applications.
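As one illustration of how these services can fit together, the event-driven pattern described for Cloud Functions might look like the sketch below. It assumes a function that receives an `(event, context)` pair whose event dictionary carries the `bucket` and `name` of a newly uploaded Cloud Storage object. The function name, the `training-data/` prefix, and the bucket name are hypothetical, and the handler only returns a decision; a real function would go on to start a training job, for example on Vertex AI.

```python
def on_training_data_uploaded(event: dict, context=None) -> dict:
    """Decide whether a newly uploaded object should trigger model retraining."""
    bucket = event.get("bucket", "")
    name = event.get("name", "")

    # Hypothetical convention: only CSV files under "training-data/" count
    # as new training data; everything else is ignored.
    should_retrain = name.startswith("training-data/") and name.endswith(".csv")

    return {"source": f"gs://{bucket}/{name}", "retrain": should_retrain}


# Local usage with a hand-built event, no cloud account required.
sample_event = {"bucket": "example-bucket", "name": "training-data/2023-01.csv"}
print(on_training_data_uploaded(sample_event))
```

Keeping the decision logic in a plain function like this makes it easy to test locally before wiring it to a real trigger.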

Leveraging both business and technical expertise

Our ML model lifecycle unifies data collection, pre-processing, model training, evaluation, deployment, and retraining into a single process that teams maintain. It allows businesses to quickly tackle obstacles faced by the data science and IT operations teams while providing a mix of technical and business expertise.

How Fractal’s methodology benefits organizations

- Eliminates guesswork

- Supports consistency

- Enables continuous packaging, validation, and deployment of models to production

- Accelerates time to value and time to deployment

- Efficiently manages data errors and model performance

- Increases model scalability during training and serving

Conclusion

As we continue through 2023, the MLOps market is growing rapidly. As ML applications become a key component of maintaining a competitive advantage, businesses are realizing they need a systematic and reproducible way to implement ML models. According to the analyst firm Cognilytica, MLOps is expected to be a $4 billion market by 2025. Fractal has deep expertise in MLOps and can help deliver solutions for unique business challenges across virtually all industries and sectors.

Ready to begin leveraging MLOps in your organization? Contact us to get started.