Companies want to test business decisions and ideas at a scale large enough to trust the results, yet small enough to avoid the heavy investment and risk that come with full-scale execution.

Trial Run helps you test initiatives such as store layout changes and remodels, loyalty campaigns, and pricing, and then recommends a tailored rollout to maximize gains. With the power of business experimentation, you can implement new ideas with minimal risk and maximum insight. Trial Run helps you:

- Test each business idea at scale to generate customer insights without excessive spending.

- Find out why your customers behave the way they do.

- Learn how your customers will react to your new big idea.

What is Trial Run?

Trial Run is a data-driven, cloud-based test management platform used to test business ideas for sites, customers, and markets. Trial Run is built using Amazon EKS, Amazon Redshift, Amazon EC2, Amazon ElastiCache, and AWS Elastic Beanstalk. It is intuitive for beginners and experts alike and helps companies scale experimentation efficiently and affordably.

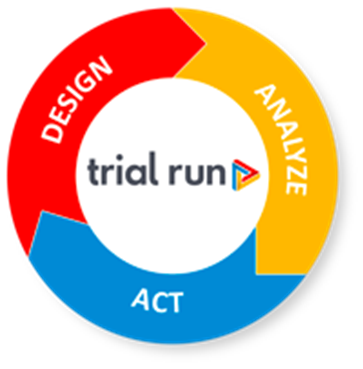

Trial Run supports the entire experimentation lifecycle, which includes:

- Design: Build a cost-effective and efficient experiment that gives you the data you need to proceed with confidence.

- Analyze: Work with variables that provide you with targeted and actionable insights.

- Act: Use the generated insights to ensure your new rollout delivers a precise, measurable ROI for your stakeholders.

Trial Run supports operational and administrative departments across industries, including Retail, Consumer Packaged Goods (CPG), and Telecommunications.

Through its scientific and methodical testing approach, Trial Run can uncover fresh perspectives and guide decision-making through a range of tests, including:

- Marketing and merchandising strategies.

- Enhancing the in-store experience.

- Examining store operations and processes.

These tests can be carried out at the store (operations and process), product, or consumer level.

Trial Run offers a dynamic, affordable, and modern way of experimentation so you can stay relevant in a rapidly changing business environment. Trial Run also helps you to drive experiments through:

- Driver Analysis: Identify the key factors that significantly drive business outcomes.

- Rollout Simulator: Maximize the ROI of a campaign.

- Synthetic Control Algorithm: Determine the right number of control stores, with appropriate weights, to create a replica of the test store.

- Experiment Calendar: Avoid overlaps between experiments.

- Clean Search: Let Trial Run parse the experiment repository and find entities that are available for a test.
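
To make the synthetic control idea more concrete, here is a minimal Python sketch. It is not Trial Run's actual algorithm or API. It assumes one common formulation of synthetic control: find non-negative weights that sum to 1 so that the weighted mix of control stores tracks the test store's pre-test sales as closely as possible. The function names, store names, and sales figures are all hypothetical, and the solver (projected gradient descent) is simply one easy way to fit such weights.

```python
# Illustrative sketch only: not Trial Run's actual algorithm or API.
# All names and sales figures below are hypothetical.

def project_to_simplex(values):
    """Project a vector onto the set of non-negative weights that sum to 1."""
    ordered = sorted(values, reverse=True)
    running_total = 0.0
    shift = 0.0
    for count, value in enumerate(ordered, start=1):
        running_total += value
        candidate = (running_total - 1.0) / count
        if value - candidate > 0:
            shift = candidate
    return [max(v - shift, 0.0) for v in values]


def fit_synthetic_control(test_series, control_series, steps=2000):
    """Fit control-store weights by projected gradient descent.

    Minimizes the squared gap between the test store's pre-test sales
    and the weighted sum of the control stores' sales.
    """
    n_controls = len(control_series)
    n_periods = len(test_series)
    weights = [1.0 / n_controls] * n_controls

    # A safe step size: 1 / (upper bound on the gradient's Lipschitz constant).
    lipschitz = 2.0 * sum(x * x for series in control_series for x in series)
    step = 1.0 / lipschitz

    for _ in range(steps):
        # Gap between the synthetic store and the real test store, per period.
        residual = [
            sum(w * s[t] for w, s in zip(weights, control_series)) - test_series[t]
            for t in range(n_periods)
        ]
        gradient = [
            2.0 * sum(r * s[t] for t, r in enumerate(residual))
            for s in control_series
        ]
        weights = project_to_simplex(
            [w - step * g for w, g in zip(weights, gradient)]
        )
    return weights


# Hypothetical pre-test weekly sales (in thousands) for three control stores.
control_sales = {
    "store_a": [1.0, 2.0, 1.5, 2.5],
    "store_b": [2.0, 1.0, 2.5, 1.5],
    "store_c": [3.0, 3.0, 1.0, 1.0],
}
# The test store here is constructed as 60% store_a plus 40% store_b.
test_sales = [1.4, 1.6, 1.9, 2.1]

weights = fit_synthetic_control(test_sales, list(control_sales.values()))
for name, weight in zip(control_sales, weights):
    print(f"{name}: {weight:.3f}")
```

In this toy example the fitted weights land close to 0.6 for store_a, 0.4 for store_b, and 0 for store_c, so only two control stores are needed to replicate the test store. After the test starts, the gap between the test store's actual sales and this weighted replica is an estimate of the lift.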

What you can expect from Trial Run

- Graphical design elements make it easy to use the program as an expert or a beginner

- Automated workflows can guide you through the process from start to finish

- Highly accurate synthetic control results with automated matching processes that only require minimal human intervention

- Experiments at speed and scale without the hassle of expert teams or expensive bespoke solutions

- Training, troubleshooting, and best practices from the best in the business

- Easy pilots to help your new idea go live in as little as 6 to 8 weeks

Trial Run stands out from other solutions by offering a transparent methodology and easily explainable recommendations. It uses a cutting-edge technique called “synthetic control” for matching, which helps ensure precise results. Trial Run is available as a SaaS offering that scales easily with demand and can be hosted on the cloud of the customer’s choice. With Trial Run, customers have unlimited test capabilities, enabling them to design and measure numerous initiatives without restrictions. Finally, Trial Run’s success is proven in enterprises, with over 1,000 use cases deployed on our platform.

How do I get started?

Are you ready to implement cutting-edge technology to help you build cost-effective and efficient experiments that provide you with the data you need to make decisions?

For a successful Trial Run implementation, get started on the AWS Marketplace.

Interested in learning more about how Fractal can help you implement Trial Run? Contact us to get in touch with one of our experts.

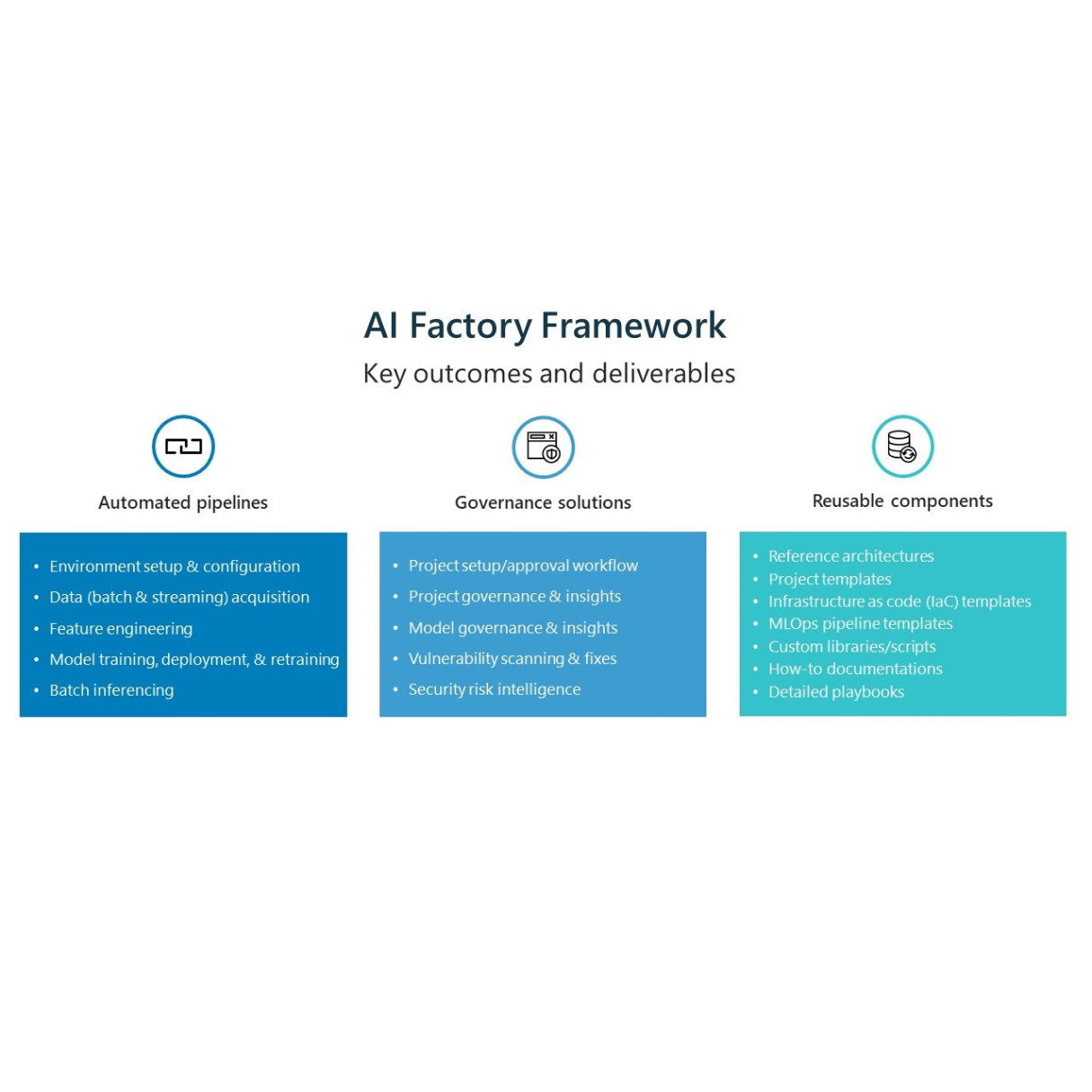

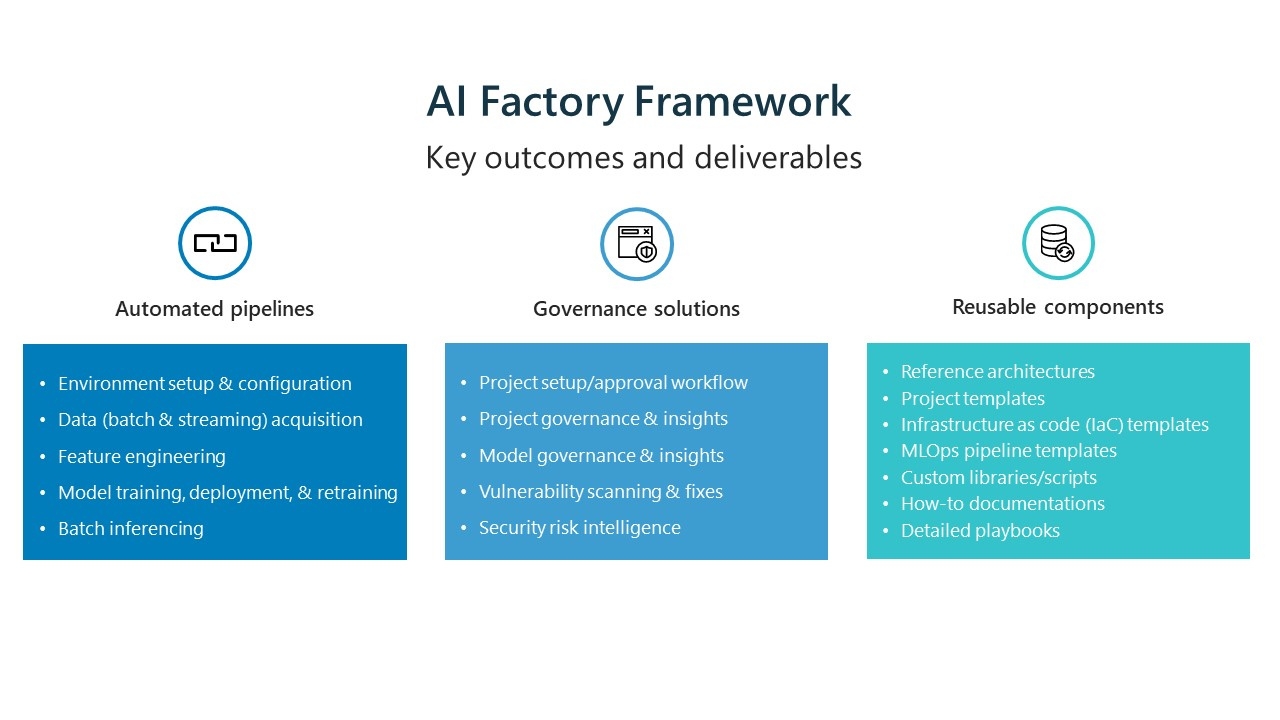
